Knowing the different image types and image file formats is very important in image processing. These determine the amount and quality of data that you can extract from an image.

Digitized images are basically numbers converted to the colors you see on screen. The smallest unit of an image, called the pixel (picture element), corresponds to one or more numbers that tell the computer what color to show. The image type determines how the pixels in an image are assigned their colors. The four basic types of images are binary, grayscale, truecolor, and indexed images.

Binary images contain only binary digits (bits): 0's and 1's, which translate to black or white per pixel. An image that is 256 x 256 pixels therefore takes only 256 x 256 bits = 65,536 bits = 8,192 bytes, or 8 KB (8 bits = 1 byte, 1 KB = 1024 bytes), when uncompressed. But let's leave compression for later.

[Figure: binary image]
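To see where the 8 KB figure comes from, here is a minimal Python sketch that packs 0/1 pixels into bytes, 8 pixels per byte. The function name `pack_binary_image` and the checkerboard test image are made up for illustration; real file formats add headers and may order the bits differently.

```python
def pack_binary_image(rows):
    """Pack rows of 0/1 pixels into bytes, 8 pixels per byte (first pixel in the highest bit)."""
    packed = bytearray()
    for row in rows:
        # Pad each row with zeros so that it fills a whole number of bytes.
        padded = list(row) + [0] * (-len(row) % 8)
        for start in range(0, len(padded), 8):
            byte = 0
            for bit in padded[start:start + 8]:
                byte = (byte << 1) | bit
            packed.append(byte)
    return bytes(packed)


# A hypothetical 256 x 256 checkerboard of black (0) and white (1) pixels.
image = [[(x + y) % 2 for x in range(256)] for y in range(256)]
packed = pack_binary_image(image)

print(len(packed))         # size in bytes
print(len(packed) / 1024)  # size in KB, using 1 KB = 1024 bytes
```

Each row of 256 pixels fits in 32 bytes, so the 256 rows together take 8,192 bytes, which matches the arithmetic above.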

In a grayscale image, each pixel is assigned a value from 0 to 255 that corresponds to a shade of gray, where the extremes are black (0) and white (255). A pixel in a grayscale image takes one byte.

[Figure: grayscale image]

One pixel in a truecolor image is actually a combination of three colors: red, green, and blue. There are 256 (0-255) possible shades each of red, green, and blue, which are overlaid to make the image of caps hanging on the wall (see below) look real. Three numbers assigned to one pixel means 3 bytes per pixel. A truecolor image contains 3 matrices, one each for the red, green, and blue shades.

[Figure: truecolor image]
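The "3 matrices" idea can be sketched in a few lines of Python. The tiny 2 x 2 image and the helper `split_channels` are made up for illustration; image libraries usually store the channels in their own array layouts.

```python
def split_channels(pixels):
    """Split a 2D grid of (red, green, blue) tuples into three 2D matrices."""
    red = [[p[0] for p in row] for row in pixels]
    green = [[p[1] for p in row] for row in pixels]
    blue = [[p[2] for p in row] for row in pixels]
    return red, green, blue


# A hypothetical 2 x 2 truecolor image: red, green, blue, and white pixels.
pixels = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]

red, green, blue = split_channels(pixels)
print(red)    # the red matrix
print(green)  # the green matrix
print(blue)   # the blue matrix

# Uncompressed size: 3 bytes (one per channel) for every pixel.
height, width = len(pixels), len(pixels[0])
size_in_bytes = width * height * 3
print(size_in_bytes)
```

The white pixel shows up as 255 in all three matrices, while each pure color appears in only one of them.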

The image below is something I made in Matlab a while back while playing around with the different colormaps available. A colormap is a table of colors with a number (index) assigned to each color. Given any matrix of numbers, the colors displayed depend on the chosen colormap. This technique is very useful for visualizing data. Indexed images like this contain both the image data and the colormap data.

[Figure: Indexed image. Flujet (from Matlab samples) using colormap Hot.]

[Figure: Matlab colormaps]
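Here is a rough Python sketch of how an indexed image is turned into colors. The colormap built by `make_hot_like_colormap` is only a black-red-yellow-white ramp that resembles Hot; it is an assumption for illustration and not Matlab's exact table. Both function names are hypothetical.

```python
def make_hot_like_colormap(n=256):
    """Build an n-entry ramp from black through red and yellow to white."""
    colormap = []
    for i in range(n):
        t = i / (n - 1)  # position along the ramp, from 0.0 to 1.0
        r = min(1.0, 3 * t)
        g = min(1.0, max(0.0, 3 * t - 1))
        b = min(1.0, max(0.0, 3 * t - 2))
        colormap.append((round(255 * r), round(255 * g), round(255 * b)))
    return colormap


def apply_colormap(indices, colormap):
    """Look up the (r, g, b) color of every index in a 2D matrix."""
    return [[colormap[i] for i in row] for row in indices]


# The image data: a small matrix of indices into the colormap.
indices = [
    [0, 64, 128],
    [128, 192, 255],
]
colormap = make_hot_like_colormap()
for row in apply_colormap(indices, colormap):
    print(row)
```

Swapping in a different colormap changes every color on screen without touching the index matrix, which is why the same data can be redrawn with any of the colormaps shown above.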

Other advanced image types include 3D images, high dynamic range images, multispectral or hyperspectral images, and temporal images or videos.

Here's an example of a 3D image. The red, green, and blue shades are taken at different angles to produce an illusion of depth when viewed using special 3D glasses.

High dynamic range (HDR) images are very popular among photographers. HDR is actually a set of techniques, usually merging several exposures of the same scene and then processing the result, that capture a wider range of brightness than a single shot and can produce crisp, realistic images. HDR is great for images of scenery like the ones below.

[Figure: HDR image]

[Figure: HDR image]

Multispectral images are usually used for scientific work like satellite imaging to get geological or atmospheric data. Multispectral images are images with data gathered for various bands of wavelengths. Shown below are examples of images at the green band, red band, and near IR wavelength. Certain features of the land area taken are distinct only at certain wavelengths. Using images like these, the production of maps of bodies of water, forests, land use, and even rock or mineral types can be automated.

[Figure: green band]

[Figure: red band]

[Figure: near IR band]

Image File Formats

My digital camera can take images at 12.1 megapixels. That's (12.1 x 10^6) x 3 bytes = 36.3 MB for one truecolor image! But of course, that is not the case. Humans are indeed witty. Someone bright thought of compressing the data stored in an image, so for one picture taken using my camera, I only get a 3 to 5 MB file based on the current setting.

[Photo: UPPA org fair booth, June 27, 2011.

Clockwise from Tracy (*wink wink* everyone knows Tracy): Angel, Crizia, Josh, Ritz, Julia, Shiela, Aiko.

The little girl on the far left is Gil. She hates having her picture taken.]
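The size estimate for the 12.1-megapixel camera can be checked with a few lines of Python. This sketch assumes 1 MB = 10^6 bytes, which is what the 36.3 MB figure implies; with the 1 KB = 1024 bytes convention used earlier, the same data comes out to about 34.6 MB. The variable names are made up for illustration.

```python
PIXELS = 12_100_000   # 12.1 megapixels
BYTES_PER_PIXEL = 3   # truecolor: one byte each for red, green, and blue

raw_bytes = PIXELS * BYTES_PER_PIXEL
raw_mb = raw_bytes / 1_000_000        # using 1 MB = 10^6 bytes
raw_mib = raw_bytes / (1024 * 1024)   # using 1 MB = 1024 x 1024 bytes

print(raw_mb)
print(round(raw_mib, 1))

# How much smaller are the 3 to 5 MB files the camera actually saves?
for file_mb in (3, 5):
    ratio = raw_bytes / (file_mb * 1_000_000)
    print(f"{file_mb} MB file: about {ratio:.1f} times smaller than the raw data")
```

So the saved files are somewhere between 7 and 12 times smaller than the uncompressed data.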

And in come the different file formats. According to this list, there are over 500 different file formats you can use to save your image. The file size of the image depends on the format used. Different file formats compress the data stored in an image for easier distribution. For faster loading of images on a website, we'd want smaller files. Compression can be either lossless or lossy. Lossless compression lets the original data be reconstructed exactly, while lossy compression cuts down on the file size by removing some data that may not be noticed at all. Of course, for work that depends on the exact pixel values, like data gathering, compression must be lossless. Here's a table comparing five of the popular image file formats:

| Extension | Format | Compression |
| --- | --- | --- |
| .tif or .tiff | Tagged Image File Format (TIFF) | May use lossless compression depending on the graphics program used. TIFF files are often saved with no compression at all. |
| .png | Portable Network Graphics (PNG) | Uses lossless compression with an algorithm (DEFLATE, the same one used in .zip files) that looks for repeated patterns in the image data. |
| .jpg or .jpeg | Joint Photographic Experts Group (JPEG) | Uses lossy compression by removing image data that could go unnoticed. This format is very popular for distributing large images, especially online. |
| .bmp | Bitmap Image File (BMP) | A format made by Microsoft for Windows (and used by MS Paint) that usually saves image files as is, pixel by pixel, without compression. |
| .gif | Graphics Interchange Format (GIF) | Reduces an image to a colormap of at most 256 colors (or fewer, depending on the setting) chosen from all the colors in the image, then compresses the indexed data losslessly. Thus, the result may be lossless or lossy depending on the original number of colors. |
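The difference between lossless and lossy compression can be sketched with Python's standard `zlib` module, which implements DEFLATE. The pixel data here is made up, and the `quantize` function is only a toy stand-in for "removing data that may not be noticed"; it is not how JPEG actually works.

```python
import zlib

# A hypothetical strip of 8-bit grayscale pixels with a repeating pattern.
pixels = bytes([10, 10, 10, 200, 200, 200] * 100)

# Lossless: after compressing and decompressing, every byte is back.
compressed = zlib.compress(pixels)
restored = zlib.decompress(compressed)
print(len(pixels), len(compressed), restored == pixels)


def quantize(data, step=16):
    """Toy lossy step: round every value down to a multiple of `step`."""
    return bytes((value // step) * step for value in data)


# Lossy: the quantized data has at most 16 distinct gray levels,
# and the original values cannot be recovered from it.
detailed = bytes((i * 37) % 256 for i in range(600))
quantized = quantize(detailed)
print(quantized == detailed)
print(len(set(detailed)), len(set(quantized)))
```

The first comparison prints `True` because nothing was lost, while the second prints `False` because the fine differences between neighboring gray levels are gone for good.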

For a more in-depth and technical comparison, click here.

The term bitmap (not to be confused with BMP) actually refers to one of the two graphic types, better known as raster, the other one being vector. Raster graphics use dots of color to form an image. It is easier to talk about pixels with raster graphics, since one dot = one pixel. Vector graphics are less commonly used than raster images. Instead of dots or pixels, a vector graphic contains a set of instructions on how to draw the image, which makes vector graphics resolution-independent. Vector graphics are usually found in programs like Microsoft Word (Shapes and Fonts) and CorelDraw.

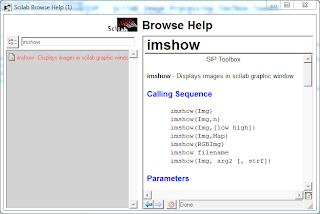
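As a small illustration of resolution independence, the Python sketch below treats "a circle with a center and a radius" as the vector instruction and turns it into a raster grid at two different sizes. The function `rasterize_circle` and its default values are assumptions made up for this example.

```python
def rasterize_circle(size, cx=0.5, cy=0.5, radius=0.4):
    """Draw a circle given in resolution-independent units (0 to 1) on a size x size grid."""
    grid = []
    for row in range(size):
        y = (row + 0.5) / size  # vertical position of the pixel center
        line = []
        for col in range(size):
            x = (col + 0.5) / size  # horizontal position of the pixel center
            inside = (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2
            line.append(1 if inside else 0)
        grid.append(line)
    return grid


# The same instruction rendered at two resolutions.
for size in (8, 16):
    print(f"{size} x {size}")
    for line in rasterize_circle(size):
        print("".join("#" if pixel else "." for pixel in line))
```

The vector description never changes; only the raster output does. Enlarging the 8 x 8 grid itself would just make the blocky pixels bigger, while re-rendering from the instruction gives a smoother circle.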

In Scilab, we can determine the image type and other properties of an image by using the function imfinfo() from the siptoolbox. I listed the properties of some images I have below:

| Property | Image 1 | Image 2 | Image 3 | Image 4 |
| --- | --- | --- | --- | --- |
| FileName | INDEXED.chimchar.gif | INDEXED.hot flujet.bmp | HDR.welcome.jpg | dice.png |
| FileSize | 3607 | 150626 | 261447 | 58618 |
| Format | GIF | BMP | JPEG | PNG |
| Width | 80 | 435 | 900 | 320 |
| Height | 80 | 343 | 509 | 240 |
| Depth | 8 | 8 | 8 | 8 |
| StorageType | indexed | indexed | truecolor | truecolor |
| NumberOfColors | 256 | 256 | 0 | 0 |
| ResolutionUnit | centimeter | centimeter | inch | centimeter |
| XResolution | 72.000000 | 0.000000 | 300.000000 | 72.000000 |
| YResolution | 72.000000 | 0.000000 | 300.000000 | 72.000000 |
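As a sanity check, the FileSize reported for the BMP file (435 x 343 pixels, depth 8, 256 colors) can be reproduced by hand. This Python sketch assumes the common uncompressed BMP layout: a 14-byte file header, a 40-byte info header, 4 bytes per colormap entry, and rows padded to a multiple of 4 bytes. The function name `bmp_size_8bit` is made up for illustration.

```python
def bmp_size_8bit(width, height, palette_entries=256):
    """Estimate the size of an uncompressed 8-bit indexed BMP file in bytes."""
    file_header = 14                  # assumed standard BMP file header
    info_header = 40                  # assumed 40-byte info header
    palette = palette_entries * 4     # blue, green, red, and one unused byte per color
    row_bytes = (width + 3) // 4 * 4  # each row is padded to a multiple of 4 bytes
    return file_header + info_header + palette + row_bytes * height


bmp_bytes = bmp_size_8bit(435, 343)
print(bmp_bytes)

# For comparison: the raw truecolor data of the 900 x 509 JPEG versus its file size.
jpeg_raw_bytes = 900 * 509 * 3
print(jpeg_raw_bytes, round(jpeg_raw_bytes / 261447, 1))
```

The estimate agrees with the 150626 bytes listed for the BMP, which fits the idea that this file stores its pixels as is. The JPEG, on the other hand, is several times smaller than its raw pixel data.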

Knowing the image type and format can help one take advantage of the features of an image.

Note: All but one of the sample images used for this post are not mine. Unfortunately, my laptop crashed the other day, so I lost most of the notes I had for this post, including the references. I will post the links to the images as soon as I find them again.

__________________________________________________________________________________

9 for effort. I didn't like this activity. Too many repetitive actions needed. -.-